I wrote this article based on interviews with UserEvidence’s leadership team during my onboarding. Together, they boast 30+ years of experience in the customer marketing and survey space, including five years at SurveyMonkey. Said differently, these guys know their stuff, and you won’t find any vanilla best practices here. What follows is tried and true.

Who doesn’t love some friendly in-office competition?

From trivia at the company holiday party to New Year’s fitness challenges, competition can be a healthy way to up engagement.

But it’s not always healthy. Have you ever butted heads with other go-to-market teammates because you both want your customers’ feedback and time for surveys and interviews? If you have, you’re in good company.

UserEvidence Co-founder and Chief Product Officer Ray Rhodes says that, at any given time, every function on the GTM team is trying to collect customer feedback. From asking for G2 reviews and feature feedback to running NPS programs, everyone’s looking for intel.

As you can imagine, these siloed requests can quickly overwhelm even your happiest power users. Ray says it well: “Everyone’s competing for this customer touchpoint. That’s a really big problem.”

Surveying your customers shouldn’t be an “us vs. them” battle, full stop.

If you plan and create your surveys thoughtfully, make the most of every customer touchpoint, and collaborate well enough to achieve each GTM team’s goals, you set yourself up for strong results.

But what does it take to make an amazing survey?

I turned to UserEvidence’s in-house experts to answer this. Along with Ray, I talked to Co-founder and CEO Evan Huck, and VP of Customer Success Myles Bradwell. From those chats, I rounded up their five best practices for designing surveys that help everyone win. Here’s what they shared.

1. Start with what you want to accomplish

When SaaS companies first set out to build a survey, “What question should I ask?” is the wrong question to ask, according to Myles. Instead, begin by defining your target audience.

- Do you want to learn from current customers?

- Potential customers?

- Ideal users of a new feature you’re launching?

Clarify this before going further.

Next, identify what you want to say to them and what you want to achieve. Evan explains that the UserEvidence team coaches people to write the “dream claim” they’d have based on the survey data.

Maybe you decide that you’d like to say something like, “70% of customers who switched from X competitor grew their win rates by 10%.” Based on that dream claim, you’ll need to ask about:

- The competitors they used before

- Their win rates before using your product

- Their win rates since using your product

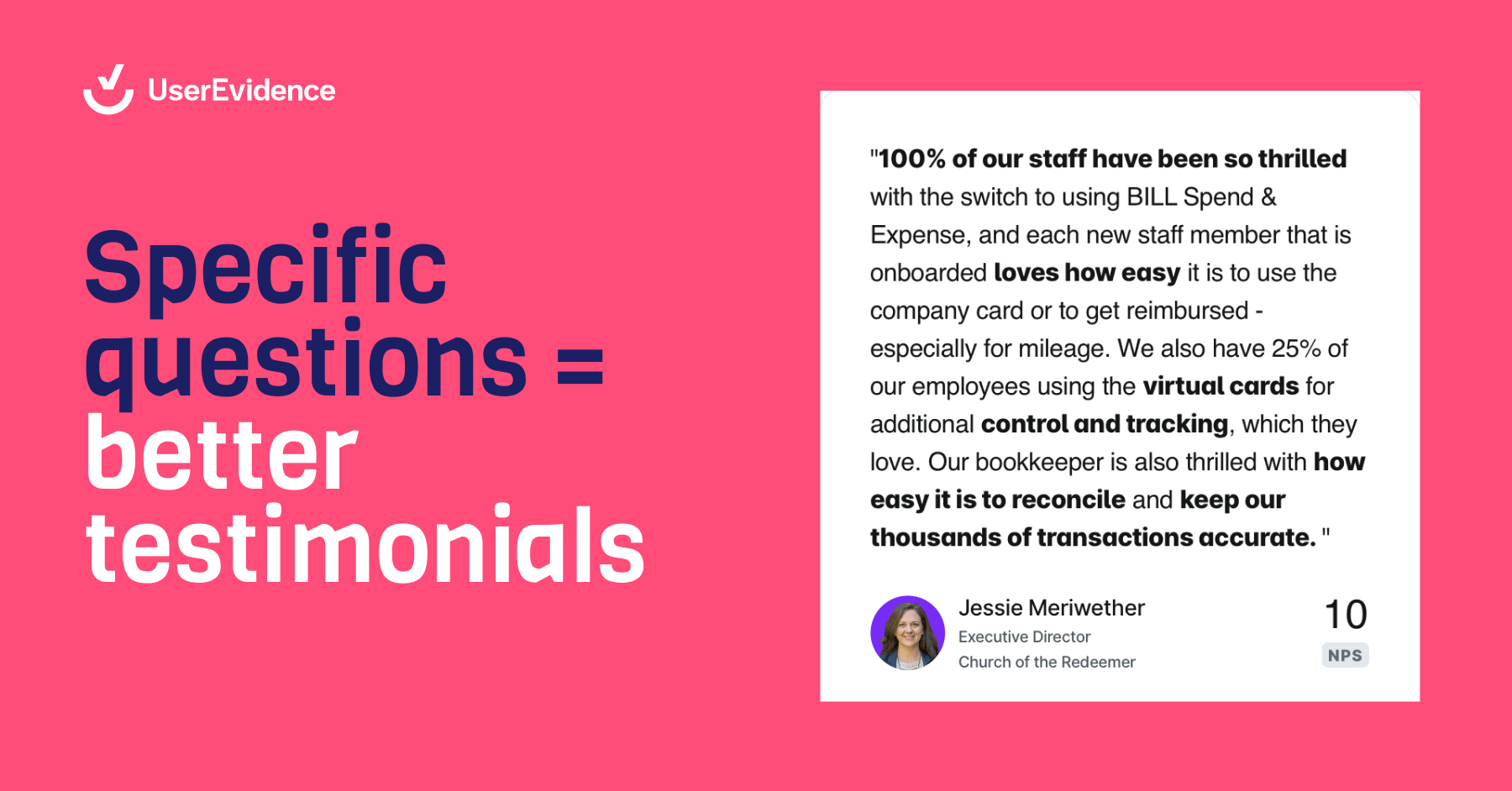
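
To make the dream-claim idea concrete, here is a minimal Python sketch of how answers to those three questions could roll up into the claim. Everything in it is an assumption made for illustration: the `Response` fields, the sample numbers, and the choice to read “grew their win rates by 10%” as relative growth of at least 10%. It is not how UserEvidence computes anything.

```python
from dataclasses import dataclass


@dataclass
class Response:
    # Hypothetical fields, one per survey question from the list above.
    previous_tool: str      # the competitor they used before
    win_rate_before: float  # win rate before using the product, in percent
    win_rate_after: float   # win rate since using the product, in percent


def dream_claim_share(responses, competitor, min_relative_growth=0.10):
    """Share of switchers from `competitor` whose win rate grew by at least
    `min_relative_growth` (relative to their starting win rate)."""
    switchers = [r for r in responses if r.previous_tool == competitor]
    if not switchers:
        return None  # nobody switched from this competitor, so there is no claim
    grew = [
        r for r in switchers
        if r.win_rate_before > 0
        and (r.win_rate_after - r.win_rate_before) / r.win_rate_before
        >= min_relative_growth
    ]
    return len(grew) / len(switchers)


# Made-up sample responses, for illustration only.
sample = [
    Response("Competitor X", 20.0, 25.0),  # 25% relative growth
    Response("Competitor X", 30.0, 31.0),  # about 3%, below the threshold
    Response("Competitor X", 10.0, 12.0),  # 20% relative growth
    Response("Competitor Y", 15.0, 30.0),  # ignored: different competitor
]

share = dream_claim_share(sample, "Competitor X")
print(f"{share:.0%} of customers who switched from Competitor X "
      "grew their win rates by 10% or more")
```

Notice that the calculation only works because each input maps to a question you asked. If any of the three is missing from the survey, the claim can’t be made.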

This approach helps you get specific with your questions, too. If you want to tell the story that your product saves time or increases revenue, don’t ask, “Do you like our product?”

Instead, your questions should sound like, “How many minutes per day do you save with our product?” or “How much additional revenue per quarter do we drive for you?”

Myles agrees: if you define your goal and the claim you want to make before building your survey, “the question parts take care of themselves.”

2. Get clear on what you’ll do with survey responses

No one invests precious time and energy in building a survey only for the responses to spend the rest of their lives hidden away in a Google Drive folder. (At least, not on purpose.)

You want your survey results to prove a point or paint a picture of how awesome your product is. That way, they can fuel sales conversations, inform marketing collateral, and strengthen customer success (CS) relationships.

In short, you need to think about your output up front:

What deliverables are you going to create, and which functions within your GTM team will use them?

Maybe you’ve got gaps in your case study library, and sales wants to reach a specific audience with a knockout piece of content. To create the desired output, you’ve got to know about those needs before building your survey, and that requires an open feedback loop between marketing and sales.

“If we don’t know what customers are really wanting and what sales wants to be able to say, we’re not going to produce the right stuff,” Myles explains.

Now is also the time to decide if you’re going to meet multiple teams’ needs in a single survey. CS needs different feedback and insights than product management, and it’s the same story with sales and marketing.

But really, that’s no problem: you can maximize your efforts by asking each team what insights they want the survey to capture.

Evan explains that UserEvidence’s last customer survey, for instance, gave each GTM function three or four questions without overloading survey-takers with an endless form. “We got a ton from that survey because we were actively thinking about which function or who in the company this data and feedback was going to serve.”

3. Choose the right kind of survey

Not all surveys are created equal. Yes, that’s because some ask the wrong questions (more on that later), but it’s also because different survey types meet different goals.

B2B companies commonly collect customer feedback via one of three survey categories:

1. Foundational

When most people think of a generic “survey,” they think of a census survey, which polls an entire customer base; Myles calls this “casting a wide net.”

But foundational surveying can be more targeted, too, designed to capture in-depth feedback from subsets of your audience. For example, you might survey only the customers who use a particular product or feature, or reach out to those from a certain region.

2. Customer journey

Did your customer just sign their contract, onboard with your CS team, or take a key action in your platform? Those milestones signal that it’s the perfect time to survey your customers about:

- Why they bought

- How you differ from competitors

- What value they’re getting from your product

We’re huge advocates for the “always-on” approach to feedback:

Rather than running an annual mega-survey or frantic yearly push for G2 reviews, build a feedback cadence based on key moments in the customer journey. That way, you’re always surveying individuals at the right times to get recent and relevant feedback.

3. Advanced

More advanced surveys let you dive even deeper — gathering testimonials, running community listening, recruiting customer advisory board members, and even asking someone who should’ve been a customer why they ended up closed-lost.

These are more in-depth or nuanced surveys, so you should be especially strategic about whom and how you ask.

If you’re stumped on which type to go with, go back to that first best practice and look at your goals for the survey research.

Are you gathering several types of feedback for multiple teams? You’re probably looking for a foundational survey.

Do you need timely feedback that works best after a moment of value? Look at a customer journey-focused survey.

Your questions and distribution strategy will follow naturally from your survey type, so it’s important to get this right.

4. Know the difference between good and bad questions

We’ve all taken surveys we enjoyed and others that made us tear our hair out. (Most of us probably end up abandoning ship partway through the latter kind of survey.)

But can you pinpoint what makes those terrible surveys so awful and what it takes to achieve survey greatness? Here’s how the UserEvidence experts break it down.

Bad surveys are too long and fail to optimize for meaningful results

Myles, Ray, and Evan all agree on this: Asking too many questions is the kiss of death for any survey.

And 2023 Typeform research backs them up: with each question you ask, you increase your drop-off rate.

Evan says that some of the worst offenders are lengthy “matrix questions.” You might ask “How would you rate these features on a scale of 1 to 5?” That’s one question, right? Not if you ask your customers to rate 20 features… and then do it again for several more survey screens.

That’s a ton of thinking and question-answering for even your most committed customers.

Please, for the love of data, don’t be that survey.

Myles points to asking too many open-ended questions as equally concerning, noting that these “brain-heavy” questions exhaust survey-takers. For example, he advises using a maximum of two testimonial-type questions.

At the other end of the spectrum, Ray says that bad surveys ask questions that are too specific. “They’re chasing perfection, as opposed to optimizing for more data,” he explains.

Evan also calls out multiple-choice questions with too many answer options: “From a data perspective, on the back end, the results get so diluted that they’re meaningless.” Whether you’re asking about lead sources or customers’ favorite features, an overabundance of choices leads to unhelpful data and weak-sauce results.

Good surveys get specific, progress naturally, and stay organized

Instead of building a never-ending survey, make every question count. Every question should help accomplish your original goal for the research.

And speaking of your goals, this is where your upfront work comes in handy. Myles advises giving respondents clear instructions in the wording of open-ended questions.

Do you want numbers in a testimonial question? Ask them to include those.

Are you looking for the positive parts of the customer experience? A good question leans into that goal by asking, “How has our product helped your business grow?” instead of “What do you think about our product?”

What about the multiple-choice pitfalls we mentioned before?

Evan suggests whittling questions that have, say, 27 potential choices down to a tighter list of five to eight options. “Put these answer options into fewer buckets so you get a more meaningful signal from each question.”
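
If you’re planning those buckets, or regrouping results from a question that already had too many options, the idea can be sketched in a few lines of Python. The lead-source options, the bucket names, and the `bucket_counts` helper below are all hypothetical, chosen only to illustrate the grouping.

```python
from collections import Counter

# Hypothetical mapping from granular answer options to broader buckets.
BUCKETS = {
    "Google search": "Search",
    "Bing search": "Search",
    "LinkedIn ad": "Paid social",
    "Facebook ad": "Paid social",
    "Colleague referral": "Word of mouth",
    "Friend referral": "Word of mouth",
}


def bucket_counts(answers, buckets=BUCKETS, fallback="Other"):
    """Count answers per bucket; unmapped answers land in the fallback bucket."""
    return Counter(buckets.get(answer, fallback) for answer in answers)


# Made-up responses to "How did you hear about us?", for illustration only.
raw_answers = [
    "Google search", "Bing search", "LinkedIn ad",
    "Colleague referral", "Friend referral", "Podcast",
]

counts = bucket_counts(raw_answers)
for bucket, n in counts.most_common():
    print(f"{bucket}: {n}")
```

Six one-off answers become four buckets here, and two of those buckets now carry enough responses to say something about.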

Shifting gears a bit, here’s another tip: A strong survey isn’t just about which individual questions you ask, but also about the order in which you ask them. This is what Evan calls “a progressive arc.”

Start by warming up respondents with broader questions — such as, “Why did you buy?” or “What features are most relevant?”

Once you’ve grounded them in the context of the product, “questions around ROI, performance, and impact start to make more sense,” Evan says. “It should have a section-based flow to it.”

Be sure to group your questions based on topic, too — pair a multiple-choice question about which competitors they considered with an open-ended question about why they chose your solution instead of another one.

Following this design principle not only helps your customers answer well but also draws more meaningful stories out of the data.

5. Customize the survey experience

The customer base for every B2B company is made up of a diverse group of users in different functions and at varying levels of experience.

If you’re surveying them all, that’s a good thing; multifaceted feedback lets you tell a more complex story about account health and product value for different personas.

Plan for this diversity in your audience by customizing the survey experience. For example, if you need different information from two personas within your customer base, you could deploy separate surveys in different contexts.

Again, go back to what you want to accomplish: your story and your desired output. If you can’t meet your needs with a single survey, put in the time to build more than one.

The data and the deliverables (not to mention your fellow GTM teammates) will thank you later.

Turn survey results into insights your buyers actually trust

When you nail survey design, you turn age-old silos across the GTM team into seamless collaboration, and you capture more thoughtful and actionable customer insights.

Plus, if you embrace an always-on approach to gathering feedback, you create the continuous flow of customer feedback you need for a rich library of relevant, up-to-date stories and proof points.

We’d love to help you remove friction from survey-sending, curate customer insights, and automate publishing the good stuff across your social channels.

Ready to put these survey design hacks to work and build better trust with your future buyers? Learn how UserEvidence works today.